Nengo Edge

I am the team lead and primary developer for NengoEdge, a cloud-based application for training and deploying audio processing models on low-power edge hardware. NengoEdge exposes powerful configuration options and detailed metrics to guide users, while remaining accessible to non-experts.

The idea behind NengoEdge is to take the complex methods we have developed inside ABR for training and deploying high-accuracy audio models on low-power hardware, and package them up into an easy-to-use web application. This allows companies to build state-of-the-art audio processing into their edge applications, without requiring large in-house development teams.

Nengo DL

I am the author/maintainer of NengoDL, a package that integrates deep learning methods with the Nengo neural modelling environment. NengoDL allows Nengo models to be optimized using deep learning training methods, and improves the simulation speed of Nengo models on CPU or GPU.

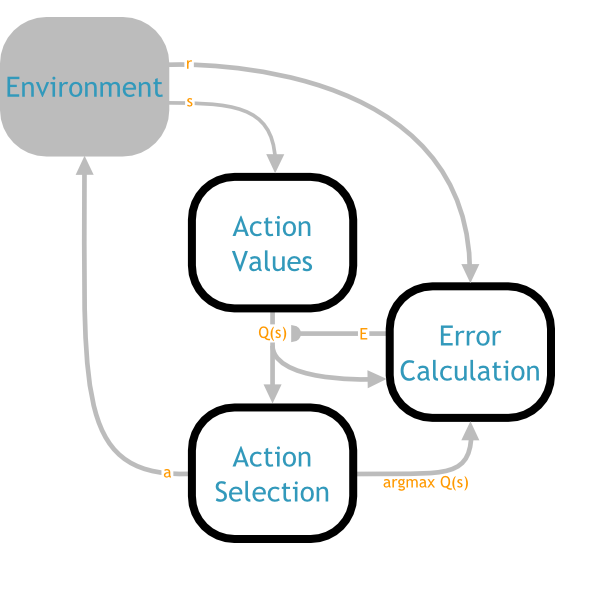

Under the hood, NengoDL is implemented using TensorFlow; it works by translating a Nengo model description into a symbolic TensorFlow computation graph. NengoDL also lets users insert TensorFlow code directly into a Nengo model, which makes it possible to simulate a wide variety of neural network architectures (such as convolutional neural networks).

- Download NengoDL (or `pip install nengo-dl`)

- Documentation

- White paper

Keras LMU

I am the maintainer of KerasLMU, an implementation of Legendre Memory Units for the Keras/TensorFlow framework. KerasLMU provides an optimized implementation of LMUs within the standard Keras RNN layer API, allowing LMUs to be integrated into any Keras model.

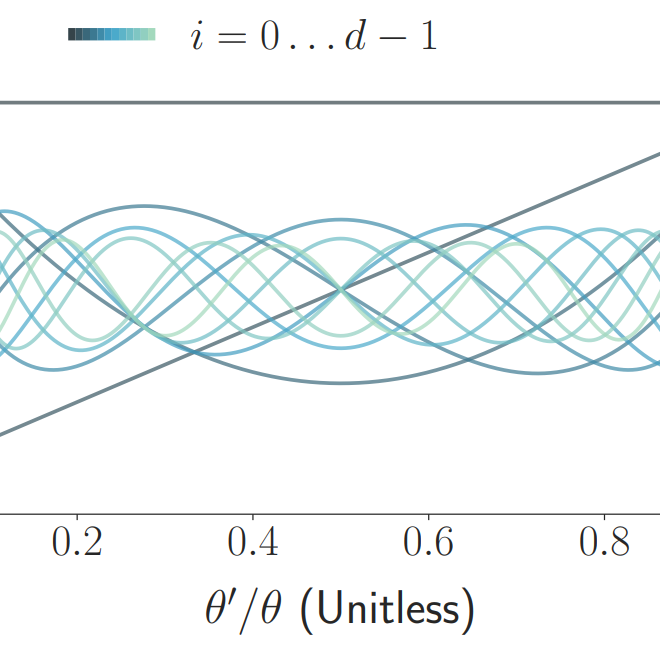

Legendre Memory Units (LMUs) are a new type of recurrent neural network architecture that can offer provably optimal representation of time-series information. The theory underlying LMUs is complex, but KerasLMU encapsulates that complexity in order to provide components that can be used as a drop-in replacement for things like LSTMs or GRUs. This makes it easy for users to explore the advantages of LMUs, using the same modelling approaches they are already familiar with.
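As a rough illustration of the kind of complexity being encapsulated, the sketch below simulates the linear memory at the core of an LMU in plain Python. It assumes the state-space matrices from the published LMU formulation; the function names, the memory order of 4, the window length `theta`, and the simple Euler update are illustrative choices, and KerasLMU's own discretization and layer structure are not shown.

```python
def lmu_matrices(order):
    """Build the (unscaled) A and B matrices of the LMU memory.

    Assumed from the published LMU formulation:
      a_ij = (2i + 1) * (-1 if i < j else (-1)^(i - j + 1))
      b_i  = (2i + 1) * (-1)^i
    """
    A = [[0.0] * order for _ in range(order)]
    B = [0.0] * order
    for i in range(order):
        B[i] = (2 * i + 1) * (-1.0) ** i
        for j in range(order):
            sign = -1.0 if i < j else (-1.0) ** (i - j + 1)
            A[i][j] = (2 * i + 1) * sign
    return A, B


def simulate_memory(inputs, order=4, theta=1.0, dt=0.001):
    """Euler-integrate theta * dm/dt = A m + B u over a sequence of inputs."""
    A, B = lmu_matrices(order)
    m = [0.0] * order
    for u in inputs:
        dm = [
            sum(A[i][j] * m[j] for j in range(order)) + B[i] * u
            for i in range(order)
        ]
        m = [m[i] + (dt / theta) * dm[i] for i in range(order)]
    return m


# Feed a constant input of 1.0 for 10 simulated seconds (ten window lengths).
memory = simulate_memory([1.0] * 10000)
```

The memory state holds the coefficients of a Legendre polynomial expansion of the recent input window. For a constant input, the state settles with all of the weight on the zeroth (constant) coefficient, which is what one would expect from such an expansion.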

- Download KerasLMU (or `pip install keras-lmu`)

- Documentation

- Learn more about LMUs

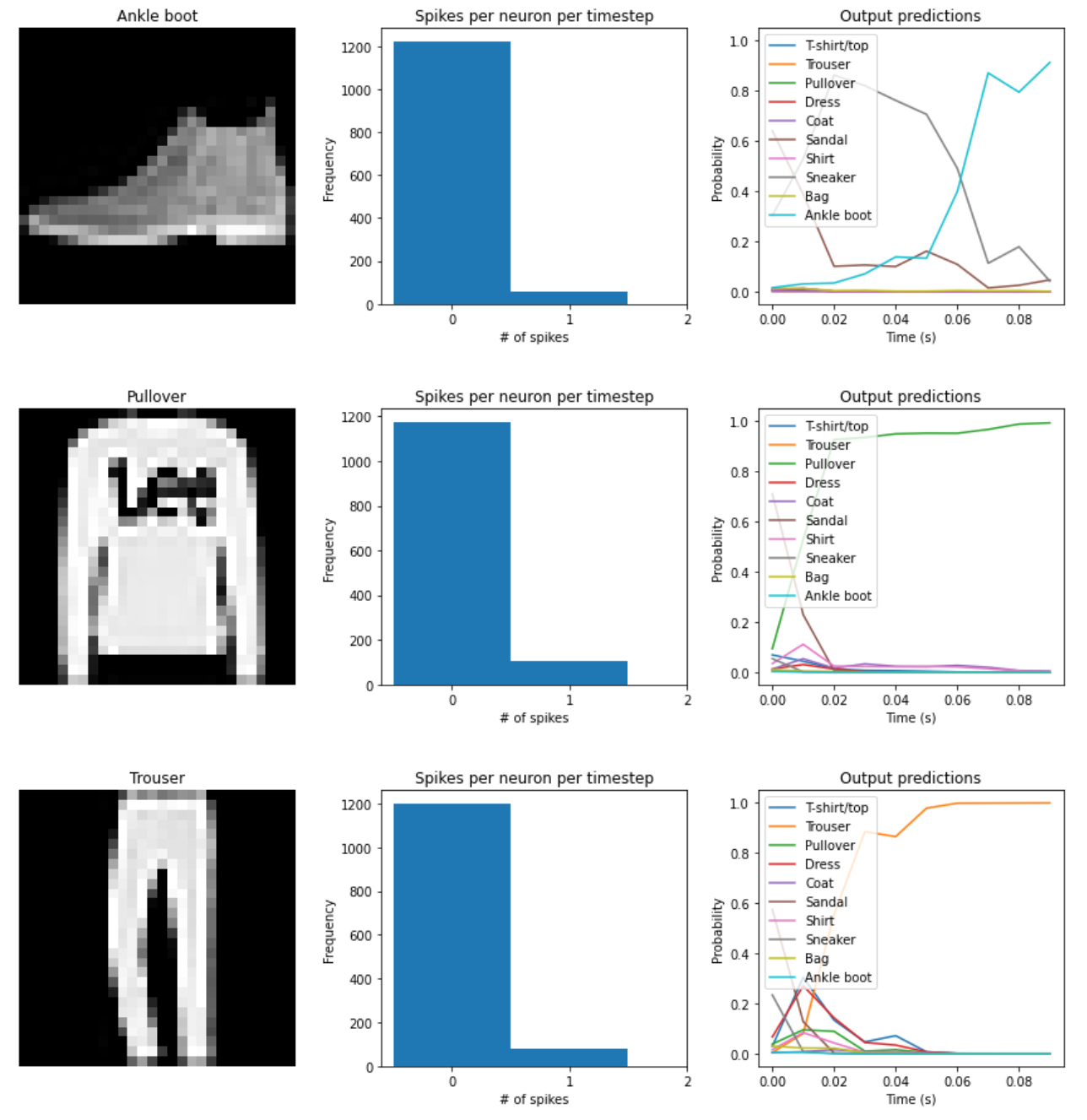

Keras Spiking

I am the lead developer/maintainer of KerasSpiking, a library that makes it easy to build spiking deep networks within the Keras framework. With KerasSpiking, users do not need to switch to a different library to build/train/deploy spiking neural models; they can do it all from within Keras, using exactly the same approach they are familiar with for non-spiking models.

KerasSpiking works by wrapping any standard Keras nonlinearity. For example, users can apply the spiking wrapper to a ReLU activation function, and the result would be analogous to an Integrate-and-Fire spiking neuron. That spiking layer can then be freely combined with any other Keras layer, enabling the construction of complex deep networks containing spiking components. The models can be trained using any of the standard Keras optimizers (KerasSpiking will use an approximation to compute the gradients of the spiking neurons that takes into account the discretization effect of spikes).
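The wrapping idea can be sketched in plain Python, without Keras. This is a conceptual illustration only, not the KerasSpiking API: `spiking_wrapper` is a hypothetical helper, the time step `dt` is an arbitrary choice, and the gradient approximation used during training is not shown.

```python
def relu(x):
    """Standard (non-spiking) ReLU activation."""
    return max(0.0, x)


def spiking_wrapper(activation, inputs, dt):
    """Run a sequence of inputs through a spiking version of `activation`.

    The activation output is treated as a firing rate that is integrated
    into a voltage; each time the voltage reaches 1 a spike is emitted and
    the voltage is reduced by 1. Assumes a non-negative activation.
    """
    voltage = 0.0
    outputs = []
    for x in inputs:
        voltage += activation(x) * dt    # integrate the rate over one step
        n_spikes = int(voltage)          # whole spikes emitted this step
        voltage -= n_spikes              # keep the remainder for later steps
        outputs.append(n_spikes / dt)    # scale so spikes average to the rate
    return outputs


# A constant input of 1.0, simulated for 8 steps of 0.25 time units each.
spikes = spiking_wrapper(relu, [1.0] * 8, dt=0.25)
mean_rate = sum(spikes) / len(spikes)
```

Here the wrapped ReLU emits a spike on every fourth step, and the output averaged over the eight steps equals the non-spiking ReLU output of 1.0. The output is discrete on any single step but matches the original activation over time, which is the discretization effect the training approximation has to account for.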

- Download KerasSpiking (or `pip install keras-spiking`)

- Documentation

- Read about the underlying theory

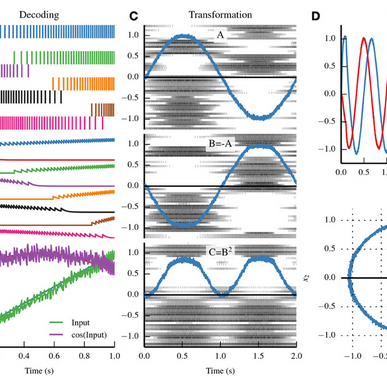

Nengo

I am one of the developers of Nengo, a software suite for the construction and simulation of large-scale neural models. Nengo is designed to aid in the translation between an algorithmic/mathematical description of a model and a detailed neural implementation. It provides a simple interface to the user, while flexibly providing more detailed information and control as the modeller requires.

Another key feature of Nengo is that it allows the same model to be run on a range of different computing platforms. Users can run their model on their home computer, on a GPU cluster, on neuromorphic hardware, or on a BlueGene supercomputer, without any modifications required on their part.

- Download Nengo (or `pip install nengo`)

- Nengo 2.0 release paper

Applied Brain Research

I am a co-founder of Applied Brain Research, Inc. The company was founded in 2014 by a small group of researchers in the Computational Neuroscience Research Group, and aims to produce practical applications based on the ideas developed in that lab.

Hessian-free optimization

I am the author of the hessianfree software package, which provides tools for training feedforward and recurrent deep networks using Hessian-free optimization. Hessian-free is a powerful second-order optimization method that has produced state-of-the-art results in deep learning (particularly in the case of recurrent networks). However, its main downside is its complexity – it is difficult to implement, and therefore difficult to customize for a given application.
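To give a flavour of the method, here is a minimal plain-Python sketch of the core idea: solve for a second-order update direction with conjugate gradient, using only Hessian-vector products so that the Hessian is never formed explicitly. This is not the hessianfree package's API; the toy loss, the function names, and the finite-difference Hessian-vector product are assumptions made for illustration, and practical details such as damping and mini-batching are omitted.

```python
def grad(w):
    """Gradient of a toy loss f(w) = (w0 - 1)^2 + 10 * (w1 + 2)^2."""
    return [2.0 * (w[0] - 1.0), 20.0 * (w[1] + 2.0)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def hessian_vector_product(grad_fn, w, v, eps=1e-4):
    """Approximate H @ v by a central difference of gradients."""
    g_plus = grad_fn([wi + eps * vi for wi, vi in zip(w, v)])
    g_minus = grad_fn([wi - eps * vi for wi, vi in zip(w, v)])
    return [(gp - gm) / (2.0 * eps) for gp, gm in zip(g_plus, g_minus)]


def conjugate_gradient(hvp, b, max_iters=50, tol=1e-16):
    """Solve H x = b using only the function hvp(v) = H @ v."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - H x (x starts at zero)
    p = list(b)          # current search direction
    rs = dot(r, r)
    for _ in range(max_iters):
        if rs < tol:
            break
        Hp = hvp(p)
        alpha = rs / dot(p, Hp)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * hpi for ri, hpi in zip(r, Hp)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


def hessian_free_step(grad_fn, w):
    """One update: solve H d = -g for d with CG, then move to w + d."""
    g = grad_fn(w)
    d = conjugate_gradient(
        lambda v: hessian_vector_product(grad_fn, w, v), [-gi for gi in g]
    )
    return [wi + di for wi, di in zip(w, d)]


w_new = hessian_free_step(grad, [0.0, 0.0])
```

Because the toy loss is quadratic, a single step lands (up to numerical error) on the minimum at `w = [1, -2]`. For a real network the loss is not quadratic, so the step is repeated and combined with safeguards such as damping, which is where much of the implementation complexity comes from.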

The hessianfree package is designed to make Hessian-free optimization more accessible to anyone that wants to work with it. It provides a simple interface for building and training networks, and makes it easy to modify the system (e.g., using custom nonlinearities, connectivity, or loss functions) without having to get involved in the internals of the optimization process.

- Download (or `pip install hessianfree`)

- Documentation

Neural modelling of hierarchical reinforcement learning

Hierarchical reinforcement learning is based on decomposing an overall task (such as making breakfast) into a composition of subtasks (such as making toast, boiling eggs, and so on). This decomposition has a number of functional benefits, allowing the learning agent to solve more complex and interesting problems.

My research investigates whether reinforcement learning processes in the brain could be explained in a similar hierarchical fashion. This has involved constructing the first neural model to implement the computational theory of HRL, and I continue to work on extending those ideas in new computational directions.

Solving Raven's Progressive Matrices in a neural model

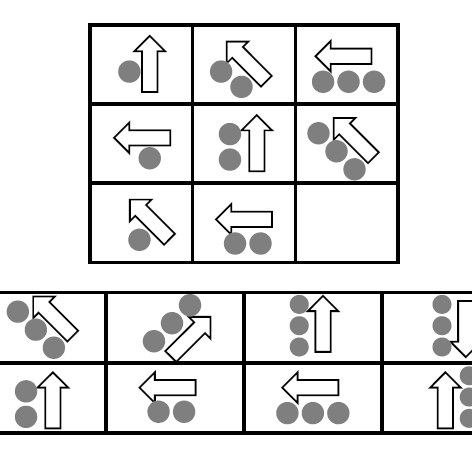

The Raven’s Progressive Matrices intelligence test is one of the most popular tests of general intelligence (the factor quantified by IQ). It is based on finding the patterns governing a sequence of geometric shapes, and using those patterns to induce the next shape in the sequence.

The idea behind this project was to build a neural model of the brain’s inductive, pattern-completion processes, and test that model on the RPM. The model was able to flexibly find the rules governing novel patterns, and because of its neural implementation it could be used to explore the neural basis of general intelligence in humans, such as the changes that occur in aging brains.

This model was also incorporated into the larger SPAUN model, forming the heart of its inductive reasoning ability.